I learned much of my natural language processing using Python’s `nltk` library which, coupled with the nltk book (https://www.nltk.org/book/), provides a great introduction to the topic. When I hit industry, however, I never really found a use for it, nor could I motivate myself to learn the intricacies of creating a corpus from my own dataset. Many of the functions could be obtained from other sources (e.g., `scikit-learn`) or hand-coded (e.g., ngrams). I’m certainly not doing the library justice, but it seemed as though `nltk` required an ecosystem that I wasn’t quite committed to.

The spaCy ecosystem, in contrast, required very little investment to get started. First, install the package and download a language pack.

```bash
pip install spacy
python -m spacy download en_core_web_sm
```

- If you’re on Windows and haven’t yet installed VS Tools, that’s probably a prerequisite.

en_core_web_smwill download an English (en) model trained on web (web) data, but the smaller (sm) one — perhaps less training data or missing components. Find a list of languages/models here: https://spacy.io/models.- If for whatever reason you can’t download with the spacy command (and have spaCy installed):

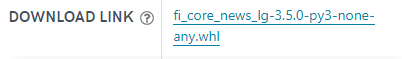

- Manually download the wheel file (e.g., from https://spacy.io/models/fi) and use the ‘Download Link’.

- Run

pip install <model>

pip install fi_core_news_lg-3.5.0-py3-none-any.whl.Now, you’re ready to go with spaCy, and whatever you want to do in the language, we can always start with the same pattern:

- Read your target text as a Python `str`.

- Run the spacy pipeline/model.

- Do something with the results.

spaCy doesn’t care how your data is stored — files, database, CSV, etc. — just build your own custom method for reading in the text using pathlib, sqlalchemy, csv, etc. Then, run that extracted text through the spaCy pipeline (we’ll just use the default), before determining how to use the data. Let’s look at some code:

```python
import spacy

text = 'Colorless green ideas are sleeping furiously.'
nlp = spacy.load('en_core_web_sm')
doc = nlp(text)

print(f'{"token":15}\t{"lemma":15}\t{"dep":15}\t{"head":15}\t{"pos":15}\t{"tag":15}')
for token in doc:  # iterate through each found token
    print(f'{str(token):15}\t{token.lemma_:15}\t{token.dep_:15}\t{str(token.head):15}\t{token.pos_:15}\t{token.tag_:15}')
```

In the printed output, you can see a number of the linguistic elements determined for each token in the input text. These include the original token (token column), the lemma (i.e., the base form of the word), its role in a dependency parse, part of speech (pos), etc. Each of these can be used in developing an algorithm. For example, to build ngrams, we might choose to omit punctuation tokens (e.g., dep/pos=’punct’) and retain the lemmatized form. (This is, e.g., done in the spacy-ngram library.)

```
token       lemma       dep      head      pos    tag
Colorless   Colorless   nmod     ideas     PROPN  NNP
green       green       amod     ideas     ADJ    JJ
ideas       idea        nsubj    sleeping  NOUN   NNS
are         be          aux      sleeping  AUX    VBP
sleeping    sleep       ROOT     sleeping  VERB   VBG
furiously   furiously   advmod   sleeping  ADV    RB
.           .           punct    sleeping  PUNCT  .
```
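To make the n-gram idea concrete, here is a minimal sketch that skips punctuation and keeps the lemmas. The function name `lemma_ngrams` is my own (hypothetical), and it relies only on the `is_punct` and `lemma_` token attributes, so it should accept a spaCy `doc` as well as the stand-in tokens used here. This is not how spacy-ngram does it, just one simple approach.

```python
from types import SimpleNamespace


def lemma_ngrams(tokens, n=2):
    """Return n-grams (as tuples of lemmas), skipping punctuation tokens."""
    lemmas = [token.lemma_ for token in tokens if not token.is_punct]
    return [tuple(lemmas[i:i + n]) for i in range(len(lemmas) - n + 1)]


# Stand-in tokens mirroring a few rows of the output above (assumption:
# in practice a real spaCy doc would be passed in instead).
sample = [
    SimpleNamespace(lemma_='idea', is_punct=False),
    SimpleNamespace(lemma_='be', is_punct=False),
    SimpleNamespace(lemma_='sleep', is_punct=False),
    SimpleNamespace(lemma_='.', is_punct=True),
]

bigrams = lemma_ngrams(sample, n=2)
print(bigrams)
```

With spaCy loaded, the equivalent call would be `lemma_ngrams(nlp(text), n=2)`.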

This example is not provided in a generalizable form. To apply this to our corpus, we can imagine using some CSV file with text stored in the ‘text’ column, and the other columns containing some sort of metadata. We’ll take the same pattern from above, though we’ll process sentence by sentence (rather than ignoring sentence boundaries).

We can create the corpus reader. Note that I am returning more than just the text as we may need the other metadata to determine the source.

```python
import csv


def read_corpus_from_csv(file):
    # newline='' lets the csv module handle line endings itself
    with open(file, newline='') as fh:
        for row in csv.DictReader(fh):
            text = row.pop('text', '')
            yield text, row  # text:str, row:dict with metadata
```
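Since spaCy doesn’t care where the text comes from, the same `(text, metadata)` shape works for other sources too. As a rough sketch, here is a hypothetical reader for a directory of text files; the function name, the `*.txt` pattern, the UTF-8 encoding, and the `filename` metadata key are all my assumptions.

```python
import tempfile
from pathlib import Path


def read_corpus_from_directory(directory, pattern='*.txt'):
    """Yield (text, metadata) for each matching file, sorted by name."""
    for path in sorted(Path(directory).glob(pattern)):
        text = path.read_text(encoding='utf-8')
        yield text, {'filename': path.name}


# Small demonstration using a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, 'a.txt').write_text('First document.', encoding='utf-8')
    Path(tmp, 'b.txt').write_text('Second document.', encoding='utf-8')
    records = list(read_corpus_from_directory(tmp))

print(records)
```

Because it yields the same `(text, metadata)` tuples, it can stand in for the CSV reader anywhere that reader is used.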

And then we modify our code in a few ways to efficiently handle this corpus:

- Provide an output CSV file for the created tokens.

- Use the `doc.sents` to iterate through the sentences

- Use `nlp.pipe` to more quickly read the elements from our corpus

```python
import csv
from pathlib import Path

import spacy

nlp = spacy.load('en_core_web_sm')
path = Path('corpus.csv')
outpath = Path('corpus_out.csv')

with open(outpath, 'w', newline='') as fh:
    writer = csv.writer(fh)
    # write header row
    writer.writerow(['token', 'lemma', 'dep', 'head', 'pos', 'tag'])
    # as_tuples=True passes each row's metadata through as `context`
    for i, (doc, context) in enumerate(nlp.pipe(read_corpus_from_csv(path), as_tuples=True)):
        for sentence_num, sentence in enumerate(doc.sents):
            for token in sentence:
                writer.writerow([str(token), token.lemma_, token.dep_, str(token.head), token.pos_, token.tag_])
```

In a real-world application, we’d probably want to manipulate the variables in some way, or at least include the document id and sentence number so that subsequent processes could know which bit of text produced which output. That aside, this is all that it takes to get started with spaCy, though it is a wardrobe door that leads to a whole new world…
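As a sketch of the document id and sentence number suggestion, the row-building step could be pulled into a generator that tags every token with both. The names `token_rows` and `FakeToken` are hypothetical, as are the `doc_id`/`sentence_num` columns; the function only assumes objects shaped like the `(doc, context)` tuples from `nlp.pipe`, and the demo uses simple stand-ins so that it runs without a model.

```python
from dataclasses import dataclass
from types import SimpleNamespace


def token_rows(docs_with_context):
    """Yield one row per token, prefixed with document id and sentence number."""
    for doc_id, (doc, _context) in enumerate(docs_with_context):
        for sentence_num, sentence in enumerate(doc.sents):
            for token in sentence:
                yield [doc_id, sentence_num, str(token), token.lemma_,
                       token.dep_, str(token.head), token.pos_, token.tag_]


@dataclass
class FakeToken:
    """Stand-in exposing only the token attributes used above."""
    text: str
    lemma_: str
    dep_: str
    head: str
    pos_: str
    tag_: str

    def __str__(self):
        return self.text


ideas = FakeToken('ideas', 'idea', 'nsubj', 'sleeping', 'NOUN', 'NNS')
sleeping = FakeToken('sleeping', 'sleep', 'ROOT', 'sleeping', 'VERB', 'VBG')
# One fake document with two one-token "sentences".
fake_doc = SimpleNamespace(sents=[[ideas], [sleeping]])

rows = list(token_rows([(fake_doc, {})]))
print(rows)
```

With spaCy, this could be called as `writer.writerows(token_rows(nlp.pipe(read_corpus_from_csv(path), as_tuples=True)))`, with `doc_id` and `sentence_num` added to the header row.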