My first attempt to code with a chatbot was several years ago and involved using ChatGPT to do a couple of data transformations using pandas. The dataset was not large. My procedure was something like:

1. State the problem and how I want the problem solved.

2. Wait for the text/code to get generated.

3. Copy-paste the code from the chatbot to my editor.

4. Run.

5. If an error is encountered, copy-paste the error into the chatbot and repeat from #1.

Regarding #3, I think I started by trying to type it out myself (the idea being to make sure I understood what was going on), but eventually resorted to copy-paste since the code didn’t end up working. In total, it took about 2 hours on a Friday before I had a working solution. I felt efficient, like I was solving a hard problem but with an added superpower. Having the chatbot fix my errors felt empowering.

Unfortunately, despite all of my feelings, the code didn’t work well. After a couple of hours, I had working code (about 20 lines), but it relied on an incredibly inefficient function called by `df.apply(func)`. I then decided to manually (i.e., chatbot-less) refactor my code. I soon found that I didn’t need the function and got it down to about 3-4 lines of code after about 20 minutes of work, and most of that work involved trying to figure out what the chatbot code was actually doing.
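To illustrate the kind of refactor described above, here is a minimal sketch. The original transformation isn't reproduced here, so the data, the column names, and the `row_total` helper are all hypothetical; the only point is the contrast between a row-wise `df.apply` and a single vectorized expression.

```python
import pandas as pd

# Hypothetical data: the original dataset is not shown in the post.
df = pd.DataFrame({
    "quantity": [2, 5, 1],
    "unit_price": [3.0, 1.5, 10.0],
})


def row_total(row):
    # Hypothetical row-wise helper: pandas calls this once per row, in Python.
    return row["quantity"] * row["unit_price"]


# apply-based version: a Python function invoked for every row.
slow = df.apply(row_total, axis=1)

# Refactored version: one vectorized expression over whole columns.
fast = df["quantity"] * df["unit_price"]

# Both approaches produce the same values.
assert slow.equals(fast)
```

On a small dataset the speed difference hardly matters, but the vectorized form is also shorter and easier to read, which is usually the bigger win.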

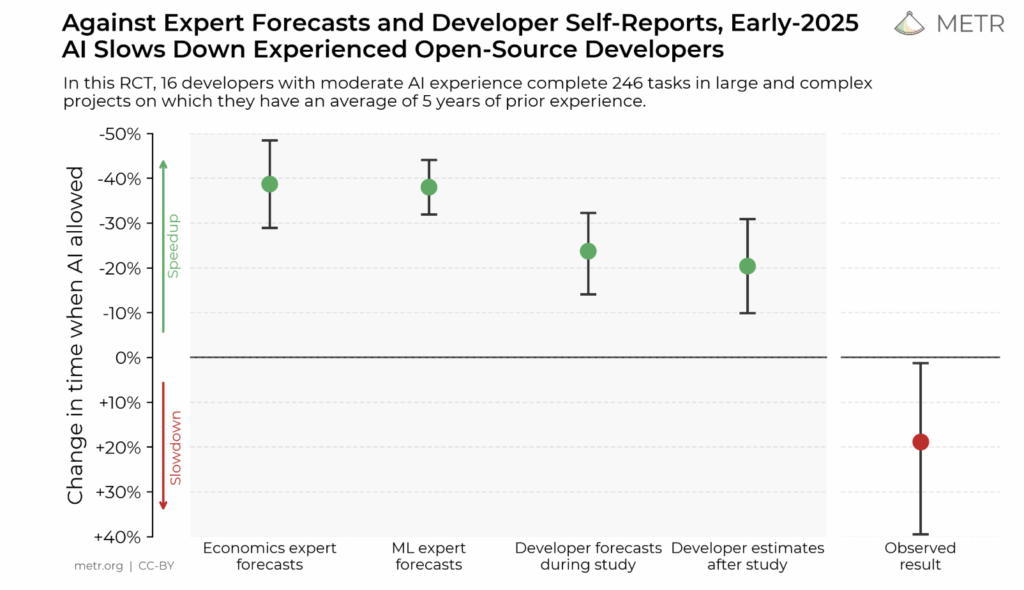

As one might expect, I walked away from this encounter dubious of the benefits of such chatbot interactions. As I have related on this blog, I began to use chatbots as a useful search tool, but avoided their use for writing code (except for writing examples). My early impressions were validated in a July 2025 publication by METR (Model Evaluation and Threat Research). The research was intended to assess the gains offered by chatbots in supporting coding, where the universal assumption was that they provide speed and efficiency boosts. In interviewing the participants (open-source maintainers) before and after their efforts, the researchers found that the participants believed beforehand that the chatbots would help them and believed afterwards that the chatbots had improved their efficiency. The data suggested otherwise.

The sample size is relatively small, but from my own experience and observations of other users, these results make sense. To put it in practical terms, the developers expected that a 60-minute task would only take 50 minutes with generative AI, but it actually took 70 minutes; or, a 1-week (i.e., 5-day) task was expected to take only 4 days, but ended up taking 6 days.
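The arithmetic behind that comparison can be sketched in a few lines. The inputs are only the illustrative round numbers from the paragraph above, not the study's exact figures, and `percent_change` is a helper name made up for this example.

```python
def percent_change(baseline, value):
    """Relative change of `value` versus `baseline`, in percent."""
    return (value - baseline) / baseline * 100


# (baseline, expected with AI, actual with AI), from the paragraph above.
scenarios = {
    "60-minute task (minutes)": (60, 50, 70),
    "1-week task (days)": (5, 4, 6),
}

for name, (baseline, expected, actual) in scenarios.items():
    print(
        f"{name}: expected {percent_change(baseline, expected):+.0f}%, "
        f"actual {percent_change(baseline, actual):+.0f}%"
    )
```

In both cases the expected saving and the actual overrun are about the same size, so the gap between perception and reality is roughly twice the expected gain.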

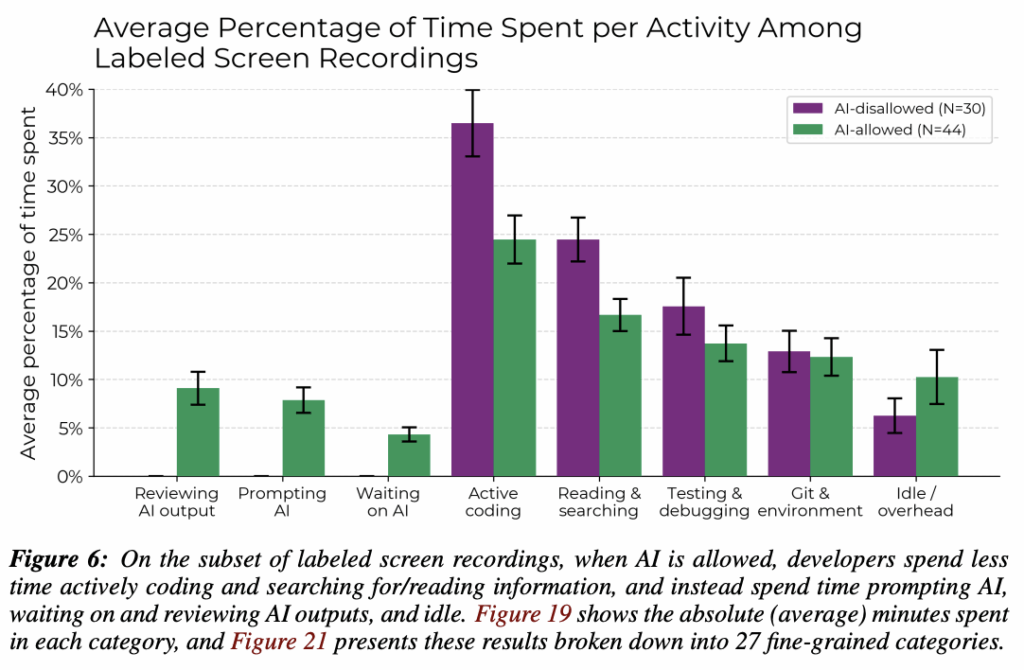

I found Figure 6 to be the most interesting. Here, the purple bars show where chatbots were not used and the green bars where chatbots were allowed. What do you notice?

I have a few observations. First, I have found ‘prompting AI’ to be one of the best exercises ever. It has been called ‘rubber-ducking’ and involves the process of actually formulating your ideas and putting them into words. I have written a number of prompts where I arrived at the solution halfway through writing the prompt. Even if it’s not done in a terminal, just attempting to articulate a problem in Notepad is incredibly useful.

Second, the amount of idle time when chatbots were allowed is incredible. 5% of the time was spent waiting — i.e., disengaged from the problem — and 15% overall not actively engaged in the task. I wonder if this is why it feels so efficient, i.e., the lack of effort? Anyway, this 15% seems to account for most of the time difference.

Third, why did those with chatbot access spend so much time reading & searching? I would have thought this could be largely done within the chatbot. Perhaps it’s linking to external documentation?

Fourth, I’d like to see more granularity between testing and debugging and the purpose of using the chatbot (e.g., for debugging vs generating code). For example, did chatbot users have to debug less? Did they write fewer tests (e.g., because they were less engaged)? (N.b., these figures are broken down further in Figure 20 in the Appendix, but there appears to be some issue with the labels…)

Here are my takeaways:

- If using chatbots, endeavour to reduce idle time (i.e., stay engaged).

- Don’t watch the incremental output: try to solve the problem on your own while it generates.

- Don’t use a chatbot to generate code you already know how to write (<44% of code recommendations accepted) — it’s far easier to write and edit your own code than someone else’s.

- Use chatbots as a search tool — they can combine search results faster than a person and generate examples.

- Use chatbot prompts as a way to explore what you want to do (even if you never submit the prompt).

I recently had the opportunity to explore using a chatbot when updating a website I’d built almost a decade ago. The website was built with an Angular frontend and a Python (Flask) backend, with data stored in SQL Server. My goal was to update the frontend, update the backend, and add some features to the frontend. While I can code Python in my sleep, I don’t really know Angular, and most of the requested features would require use of Angular. This is where I decided to rely heavily on a chatbot.

I used chatbots for three purposes:

- Writing/modifying Angular code (e.g., ‘How do I do X in Angular?’ and ‘Change this class so that it does Y.’).

- Debugging Angular code (e.g., ‘I’m getting the error X in the web development console — how do I fix it?’).

- Searching (e.g., ‘What is X?’ and ‘What does Y mean?’).

When I engaged in any of these activities, I would do my best to not watch the generating output, but instead to:

- Search for the answer/content myself.

- Think about the issue.

- Plan what seem like reasonable next steps.

I hoped that this would keep me focused on the issues at hand and prevent my loss of focus or doom-spiral into idleness.

I didn’t have any controls, but I felt I did a decent job of reducing my idle time and staying engaged. The whole process was effortful, and it felt more like we were a team than like I was using a tool. Without a control, it’s difficult to prove that I haven’t deluded myself like the open-source programmers in the METR study…

There is one area, however, where I found chatbots to absolutely SHINE: generating CSS. I’ve never really learned CSS, and typically spend hours trying to get something to work. Based on my own past experience, I know that this sped up my CSS writing (e.g., I could prompt ‘generate CSS to make this HTML page look better’ then copy-paste, refresh, and done). The only issue which came up was styling certain PrimeNG elements. I’m not sure if I did this correctly, but I found that the solution was often only arrived at when the chatbot and I ‘worked together’. E.g., in my own searching, I found the underlying issue or problem and was able to tell the chatbot what elements to use, etc. I would imagine a lot of the issues with PrimeNG styling are due to changes between API versions (i.e., most of the chatbot’s data was on older versions) and the relative rarity of PrimeNG styling on the internet (at least, compared with something like Python). I could basically copy-paste the details from the documentation and quickly experiment with the CSS… just please don’t read it… maybe not understanding this part is okay?